Positron’s unique compute and memory architecture allows superintelligence to run in a box, with its upcoming custom silicon targeting the highest performance per dollar and performance per watt for frontier multimodal models.

Positron AI, the premier company for American-made semiconductors and inference hardware, today announced the close of a $51.6 million oversubscribed Series A funding round, bringing its total capital raised this year to over $75 million. The round was led by Valor Equity Partners, Atreides Management and DFJ Growth. Additional investment came from Flume Ventures, which includes tech icon Scott McNealy, Resilience Reserve, 1517 Fund and Unless.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20250728912387/en/

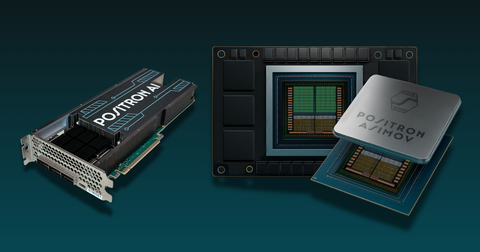

(Left) Positron’s first-generation PCIe card-based inference accelerators powering Atlas. (Right) Positron’s next-generation Asimov accelerator, coming in 2026.

This new funding will support the continued deployment of Positron’s first-generation product, Atlas, and accelerate the rollout of its second-generation products in 2026.

With global tech firms projected to spend over $320 billion on AI infrastructure in 2025, enterprises face intensifying cost pressures, power ceilings and chronic shortages of NVIDIA GPUs. Positron’s purpose-built alternative delivers cost and efficiency advantages that come from specialization. The company is currently shipping its first-generation product, Atlas, which delivers 3.5x better performance-per-dollar and up to 66% lower power consumption than NVIDIA’s H100. Unlike general-purpose GPUs, Atlas is designed solely to accelerate and serve generative AI applications.

“The early benefits of AI are coming at a very high cost – it is expensive and energy-intensive to train AI models and to deliver curated results, or inference, to end users. Improving the cost and energy efficiency of AI inference is where the greatest market opportunity lies, and this is where Positron is focused,” said Randy Glein, co-founder and managing partner at DFJ Growth. “By generating 3x more tokens per watt than existing GPUs, Positron multiplies the revenue potential of data centers. Positron’s innovative approach to AI inference chip and memory architecture removes existing bottlenecks on performance and democratizes access to the world’s information and knowledge.”
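The power and tokens-per-watt figures above are related by simple arithmetic. The sketch below assumes, purely for illustration, that throughput is equal to the baseline; the release does not state the throughput ratio, and the function name is hypothetical.

```python
def tokens_per_watt_gain(power_reduction: float, throughput_ratio: float = 1.0) -> float:
    """Tokens per watt relative to a baseline accelerator.

    power_reduction: fractional drop in power draw (0.66 means 66% lower).
    throughput_ratio: tokens/s relative to the baseline (assumed 1.0 here).
    """
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    # Same tokens delivered on a fraction (1 - reduction) of the power.
    return throughput_ratio / (1.0 - power_reduction)


gain = tokens_per_watt_gain(0.66)
print(f"{gain:.2f}x tokens per watt at equal throughput")
```

Under that equal-throughput assumption, 66% lower power works out to roughly 2.9x tokens per watt, which is in line with the "3x" figure quoted above.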

Positron Atlas’s memory-optimized, FPGA-based architecture achieves 93% memory bandwidth utilization, compared with the typical 10–30% in GPU-based systems, and supports models of up to half a trillion parameters in a single 2-kilowatt server. It is fully compatible with Hugging Face transformer models and serves inference requests through an OpenAI API-compatible endpoint. Atlas is powered by chips fabricated in the U.S. and is already deployed in production environments, enabling LLM hosting, generative agents and enterprise copilots with significantly lower latency and reduced hardware overhead.
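As a rough illustration of what an OpenAI API-compatible endpoint means for a client, the sketch below builds a standard chat-completions request using only the Python standard library. The base URL, API key and model ID are placeholders chosen for this example, not values published by Positron; only the `/chat/completions` path and payload shape follow the OpenAI API convention.

```python
import json
import urllib.request

BASE_URL = "http://atlas.example.internal:8000/v1"  # hypothetical endpoint address
MODEL_ID = "meta-llama/Llama-3.1-8B-Instruct"       # placeholder Hugging Face model ID


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_ID, api_key: str = "EMPTY") -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request (nothing is sent here)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


req = build_chat_request("Summarize this press release in one sentence.")
print(req.full_url)
```

Calling `send(req)` would only succeed against a live server at the placeholder address. The point of the sketch is that existing OpenAI-style client code should need little more than a different base URL.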

“Memory bandwidth and capacity are two of the key limiters for scaling AI inference workloads for next-generation models,” said Dylan Patel, founder and CEO of SemiAnalysis, and an advisor and investor in Positron. “Positron is taking a unique approach to the memory scaling problem, and with its next-generation chip, can deliver more than an order of magnitude greater high-speed memory capacity per chip than incumbent or upstart silicon providers.” SemiAnalysis is a leading research firm specializing in semiconductors and AI infrastructure that provides detailed insights into the full compute stack.
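To see why memory bandwidth matters so much, consider a common back-of-the-envelope model: when generating one token at a time for a single request, the weights must be read from memory once per token, so decode speed is bounded by effective bandwidth divided by model size. The sketch below applies that approximation with a hypothetical peak bandwidth of 1,000 GB/s and a 70-billion-parameter model at 2 bytes per parameter. Those numbers are illustrative assumptions, not Positron or NVIDIA specifications; only the utilization percentages come from the release.

```python
def decode_tokens_per_second(peak_bandwidth_gbs: float, utilization: float,
                             params_billions: float, bytes_per_param: float) -> float:
    """Upper bound on single-stream decode speed when weight reads dominate.

    Ignores KV-cache traffic, batching and compute limits.
    """
    model_gb = params_billions * bytes_per_param        # weight footprint in GB
    effective_gbs = peak_bandwidth_gbs * utilization    # bandwidth actually achieved
    return effective_gbs / model_gb


PEAK_GBS = 1000.0  # hypothetical peak bandwidth, identical for every row
for label, util in [("93% utilization", 0.93),
                    ("30% utilization", 0.30),
                    ("10% utilization", 0.10)]:
    rate = decode_tokens_per_second(PEAK_GBS, util, params_billions=70, bytes_per_param=2)
    print(f"{label}: {rate:.2f} tokens/s")
```

The comparison holds peak bandwidth fixed, which real hardware does not, so it shows only how utilization scales the bound rather than how any two products compare.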

Capital-Efficient Execution and Technical Depth

Positron was co-founded in 2023 by CTO Thomas Sohmers and Chief Scientist Edward Kmett, with former Lambda COO Mitesh Agrawal joining as CEO to scale the company’s commercial operations. In just 18 months, the team brought Atlas to market with only $12.5 million in seed funding. They validated performance, landed early enterprise customers and hardened the product in deployment environments before raising this Series A.

Now, with growing adoption and a clear product roadmap, Positron is developing custom ASICs to unlock the next level of performance, power efficiency and deployment scale for inference.

“We founded Positron to meet the demands of modern AI: aiming to run the frontier models at the lowest cost per token generation with the highest memory capacity of any chip available,” said Mitesh Agrawal, CEO of Positron AI. “Our highly optimized silicon and memory architecture allows for superintelligence to be run in a single system with our target of running up to 16-trillion-parameter models per system on models with tens of millions of tokens of context length or memory-intensive video generation models.”

Early Enterprise Traction and Strategic Deployment

Positron’s first publicly announced customers include Parasail (with SnapServe) and Cloudflare, alongside additional deployments within other major enterprises and leading neocloud providers.

A New Standard for American AI Infrastructure

With the Series A closed, Positron is now advancing its next-generation system, engineered specifically for large-scale frontier model inference. Titan, the follow-on to Atlas powered by Positron’s ‘Asimov’ custom silicon, will feature up to two terabytes of directly attached high-speed memory per accelerator, allowing models of up to 16 trillion parameters to run on a single system and massively expanding context limits for the world’s largest models.
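A quick sizing sketch shows how per-accelerator memory relates to model size. It assumes that weights dominate the footprint and ignores KV cache and activations. The bytes-per-parameter values are illustrative, since the release does not state the numeric precision or the number of accelerators per Titan system.

```python
import math

MEMORY_PER_ACCELERATOR_TB = 2.0  # "up to two terabytes" per accelerator, from the release


def accelerators_needed(params_trillions: float, bytes_per_param: float,
                        memory_tb: float = MEMORY_PER_ACCELERATOR_TB) -> int:
    """Minimum accelerators required just to hold the model weights."""
    # One trillion parameters at one byte each is one terabyte (10**12 bytes).
    weights_tb = params_trillions * bytes_per_param
    return math.ceil(weights_tb / memory_tb)


# Illustrative precisions only; the release does not specify a format.
for name, nbytes in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    count = accelerators_needed(16, nbytes)
    print(f"16T parameters at {name}: {count} accelerators")
```

Under these assumptions, a 16-trillion-parameter model at 8 bits per weight occupies 16 TB and would span at least eight 2 TB accelerators.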

Titan will support parallel hosting of multiple agents or models, removing the traditional 1:1 model-to-GPU constraint that limits efficiency. Its over-provisioned, inference-tuned networking architecture will ensure low-latency, high-throughput performance even under heavy concurrency. With a standard data center form factor and no need for exotic cooling, the system is designed for seamless integration into existing infrastructure.

“We have passed on the overwhelming majority of AI accelerator startups we have diligenced over the last 6 years as most of them were mounting frontal assaults on NVIDIA that were unlikely to succeed. Positron has carefully chosen a defensible niche in low-cost inference. More importantly, they have proven that their software works before developing an ASIC: Positron is running competitively priced production inference workloads today on 2022 era FPGAs in a server of Positron’s own design. This speaks to the quality of their software stack, their system-level expertise and the judgment of their management team,” said Gavin Baker, managing partner and chief investment officer of Atreides Management.

About Positron AI

Positron AI is transforming generative AI inference with energy-efficient, high-performance compute systems built entirely in the United States. Headquartered in Reno, Nevada, with a remote-first team distributed across the country, Positron’s unique hardware architecture offers the lowest total cost of ownership for transformer models by solving the power, memory, and scalability bottlenecks of legacy infrastructure.

Learn more at www.positron.ai

Contacts

Media Contact:

Helen Cho

Bonfire Partners

helen@bonfirepartners.io